
Label Recognition for Herbarium

(14) Search batches of herbarium images for text entries related to the collector, collection or field trip

Team 14 is using machine-learning to search for patterns on digitised #herbarium vouchers, to label entries related to the collector, collection or field trip. Data by Herbaria of UZH & ETHZ > #GLAMhack2022 @usys_ethzh @eth @ETH_en @UZH_Science @UZH_ch @UZH_en @alg2115 @liowalter pic.twitter.com/zzoL6ZFQd5

— Opendata.ch (@OpendataCH@mastodon.social) (@OpendataCH) November 5, 2022

Goal

Set up a machine-learning (ML) pipeline that searches for a given image pattern among a set of digitised herbarium vouchers.

Dataset

United Herbaria of the University and ETH Zurich

Team

- Marina

- Rae

- Ivan

- Lionel

- Ralph

Problem

Imaging herbaria is comparatively time- and cost-efficient, because herbarium vouchers are easy to handle, vary little in size, and can be treated as 2D objects. Recording metadata from the labels is, however, much more time-consuming: the writing is often difficult to decipher, and the relevant information is neither systematically ordered nor complete, which leads to errors and misinterpretations. Improvements to current digitisation workflows therefore need to focus on metadata recording.

Benefit

Developing the proposed pipeline would (i) save herbarium staff considerable time when recording label information and help them avoid errors (e.g. attributing wrong collector or place names), (ii) allow duplicates to be merged and special collections to be re-assembled virtually, and (iii) facilitate the transfer of metadata records between institutions that share the same web portal or aggregator. The pipeline could also be useful to many other natural history collections.

Case study

The vascular plant collection of the United Herbaria Z+ZT of the University (Z) and ETH Zurich (ZT) encompasses about 2.5 million objects, of which about 15% (approximately 350,000 specimens) have already been imaged and are publicly available on GBIF (see this short movie for a brief presentation of the institution). Metadata recording is, however, still far from complete.

Method

- Define the search input, either by cropping a portion of a label (e.g. the header from a specific field trip, a collector name or a stamp), or by typing the given words in a dialog box

- Detect labels on voucher images and extract them

- Extract text from the labels using OCR and create searchable text indices

- Search for this pattern among all available images

- Retrieve batches of specimens that contain the given text as a list of barcodes
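
The last three steps (text index, search, barcode list) can be illustrated with a small sketch. It assumes the OCR step has already produced one text string per voucher; the barcodes, the sample texts and the function names `normalise`, `build_index` and `search` are made up for illustration and are not taken from the team's repository.

```python
import re
from collections import defaultdict


def normalise(text):
    """Lowercase OCR text and split it into alphanumeric tokens."""
    return re.findall(r"\w+", text.lower())


def build_index(ocr_by_barcode):
    """Map each token to the set of barcodes whose label text contains it."""
    index = defaultdict(set)
    for barcode, text in ocr_by_barcode.items():
        for token in normalise(text):
            index[token].add(barcode)
    return index


def search(query, index, ocr_by_barcode):
    """Return the sorted barcodes whose label text contains the query phrase."""
    tokens = normalise(query)
    if not tokens:
        return []
    # Candidate vouchers must contain every query token.
    candidates = set.intersection(*(index.get(t, set()) for t in tokens))
    # Keep only candidates where the tokens occur as a contiguous phrase.
    # The padding spaces prevent matches that start or end mid-token.
    phrase = " " + " ".join(tokens) + " "
    return sorted(
        barcode
        for barcode in candidates
        if phrase in " " + " ".join(normalise(ocr_by_barcode[barcode])) + " "
    )


# Hypothetical OCR output, keyed by made-up barcodes.
ocr_by_barcode = {
    "Z-000001": "Herbier de la Soie. Valais, 1903",
    "Z-000002": "leg. Walo Koch, Zürich",
    "ZT-000003": "Herbier des Chanoines",
}
index = build_index(ocr_by_barcode)
print(search("Herbier de la Soie", index, ocr_by_barcode))
```

This sketch matches exact tokens only. Real OCR output contains misread characters, so a production version would probably need some form of fuzzy matching.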

Examples

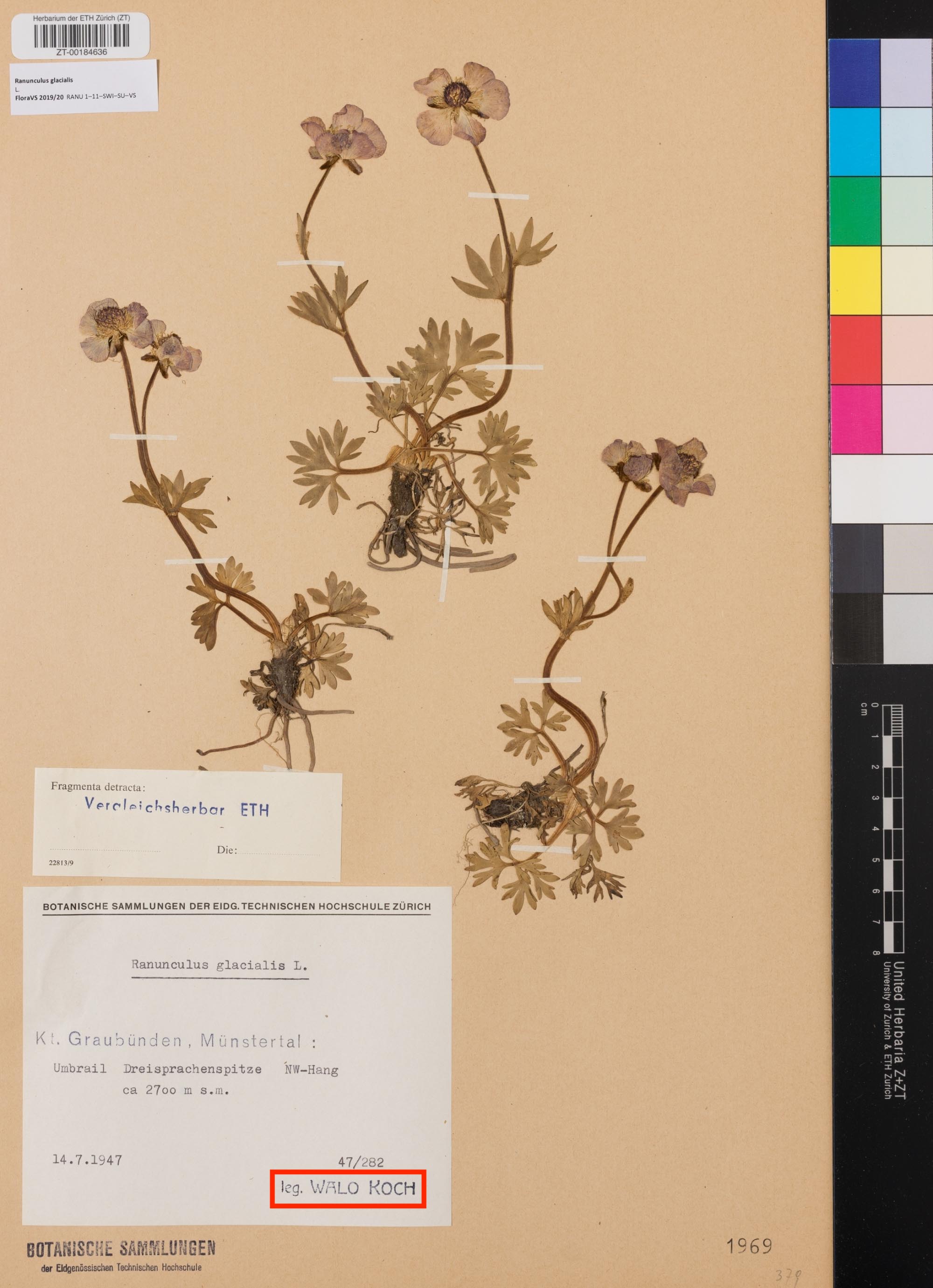

These voucher images show what would typically be searched for, highlighted with red squares.

a) Search for "Expedition in Angola"

b) Search for "Herbier de la Soie"

c) Search for "Herbier des Chanoines"

d) Search for "Walo Koch"

e) Search for "Herbarium Chanoine Mce Besse"

Problem selected for the hackathon

The most useful thing for the curators who enter metadata for the scanned herbarium images would be to know which vouchers were created by the same botanist.

Vouchers can have multiple different labels on them. The oldest and most important one (for us) was added by the botanist who sampled the plant. This label is usually the biggest one, and at the bottom of the page. We saw that this label is often typed or stamped (but sometimes handwritten). Sometimes it includes the word 'leg.', which is short for legit, Latin for 'sampled by'.
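
The observation that the collector's label is usually the biggest one and sits at the bottom of the page could be turned into a simple ranking heuristic. The sketch below is only an illustration: it assumes a label detector has already returned bounding boxes in pixel coordinates, and the `LabelBox` class, the `pick_collector_label` function and the equal weighting of size and position are all assumptions, not something the team implemented.

```python
from dataclasses import dataclass


@dataclass
class LabelBox:
    x: float       # left edge in pixels
    y: float       # top edge in pixels, measured from the top of the page
    width: float
    height: float

    @property
    def area(self):
        return self.width * self.height

    @property
    def bottom(self):
        return self.y + self.height


def pick_collector_label(boxes, page_height):
    """Pick the box most likely to be the collector's label.

    Heuristic (an assumption): favour boxes that are large and low on the
    page, giving relative area and relative vertical position equal weight.
    Assumes at least one box has a positive area.
    """
    if not boxes:
        return None
    max_area = max(box.area for box in boxes)

    def score(box):
        return box.area / max_area + box.bottom / page_height

    return max(boxes, key=score)


# Hypothetical boxes on a page that is 2000 pixels high.
boxes = [
    LabelBox(x=100, y=200, width=300, height=100),    # small label near the top
    LabelBox(x=500, y=1300, width=600, height=400),   # large label near the bottom
]
best = pick_collector_label(boxes, page_height=2000)
print(best)
```

Because the collector's label is only usually the largest and lowest one, a heuristic like this would need to be checked against the label text, for example by looking for 'leg.'.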

After that, more labels can be added whenever another botanist reviews the voucher. This might include identifying the species, and that could happen multiple times. These labels are usually higher up on the page, and often handwritten. They might include the word 'det.', short for 'determined by'.

We decided to focus on vouchers collected by the botanist Walo Koch. He also determined many specimens, so it is important to distinguish between vouchers with 'leg. Walo Koch' and 'det. Walo Koch'.
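
Once label text is available, the 'leg.' versus 'det.' distinction can be sketched with a regular expression. The pattern and the `koch_roles` function below are illustrative assumptions: they cover only the abbreviations 'leg' and 'det' followed by 'Walo Koch', 'W. Koch' or 'Koch', and they do not handle OCR misreadings.

```python
import re

# 'leg' or 'det', an optional full stop, then an optional first name or
# initial, then 'Koch'. Case is ignored because OCR casing is unreliable.
ROLE_PATTERN = re.compile(
    r"\b(leg|det)\b\.?\s*(?:W(?:alo\b|\.)\s*)?Koch\b",
    re.IGNORECASE,
)


def koch_roles(label_text):
    """Return the roles ('collected', 'determined') found for Koch in the text."""
    roles = set()
    for match in ROLE_PATTERN.finditer(label_text):
        if match.group(1).lower() == "leg":
            roles.add("collected")
        else:
            roles.add("determined")
    return roles


# Made-up label texts for illustration.
print(koch_roles("leg. Walo Koch 12.7.1925"))
print(koch_roles("det. W. Koch"))
print(koch_roles("leg. A. Thellung"))
```

A voucher can carry both roles, which is why the function returns a set rather than a single value.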

Data Wrangling documentation

How did we clean and select the data?

Selected tool

We decided to use eScriptorium to do OCR on the images of the vouchers. It is open source and we could host our own instance of it, which meant we could make as many requests as we wanted. We also found a Python library for connecting to an eScriptorium server, so we hoped to automate the image processing.

Setting up eScriptorium on DigitalOcean

eScriptorium lets you manually transcribe a selection of images, and then builds a machine-learning model that it can use to automatically transcribe more of the same kind of image. You can also import a pre-existing model. We found a list of existing datasets for OCR and handwriting transcription at HTR-United, and Open Access models at Zenodo.

From the Zenodo list, we picked this model as probably a good fit with our data: HTR-United - Manu McFrench V1 (Manuscripts of Modern and Contemporaneous French). We could refine the model further in eScriptorium by training it on our images, but this gave us a good start.

Video Demo

Presentation

Google Drive folder

Tools we tried without success

Transkribus

https://readcoop.eu/transkribus/. Not open source, but very powerful. Not easy to use via an API.

Open Image Search

The imageSearch pipeline developed by the ETH Library. The code is freely available on GitHub under an MIT License.

We were not able to run it, because the repository is missing some files that are necessary to build the Docker containers.

GitHub

See also our work on GitHub, kindly shared by Ivan.


We have set up an instance of eScriptorium that's available online, and we've found a collection of images that might be relevant to our question. Now we're training an OCR model on those images in eScriptorium.
